Layer 3 -- My study notes and study technique

There are plenty of ways to study and prepare for an exam. This is just one technique you can use, and it is the one that is working very well for me. Since this is a study group I will not explain every detail; I will just share the way I'm studying, along with my study notes, as I work through each CCIE RSv5 topic. I think we can all agree that some kind of dedication is needed to pass this exam. For me, that dedication means writing down, every time I study, what I learned and which topics I covered.

While studying for the CCIE I tend to write a lot in my study document to help with the learning process and to keep me motivated. This is basically how I study, and I quote my "little" document:

General study technique that will eventually make me a master of the CCIE RSv5 topics.

In the beginning I thought that I could apply the Layer 2 study technique to the Layer 3 studies as well. It worked for some topics, while others were impossible to find on the Cisco website or in any configuration guide at all.

So I kept the same approach where possible: I use the configuration and technology explanations of the IOS 15.3T release for topics that can be found there, and other theory resources for topics that are not on the Cisco website.

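My three general steps are:

- First, read through the configuration and technology explanations for the IOS 15.3T release, if there are any for the specific blueprint topic. If there are none, go to other sources such as RFCs, forums, CCIE blogs and Cisco books and study the theory there.

- Secondly, watch the INE CCIE videos about the topic to learn it in more depth. I've noticed that their video series does not follow their own reordered study plan for the CCIE RSv5 blueprint, so I watch the videos in the order that matches the study plan rather than the order they come in.

- Lastly, lab it up using the INE CCIE RSv5 topology and their CCIE workbook. Most topics are covered in the workbook, but for topics I don't feel confident enough in I will create my own labs to verify the theory.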

Repeat this for each and every topic in the CCIE RSv5 lab blueprint. Layer 3 technologies make up most of the CCIE lab, so it's going to take a lot of time to prepare for this! I estimate that Layer 3 configuration is about 85% of the scope of the exam.

As part of my CCIE preparation I have also noted my preparation time in this document, listed per technology. It reminds me how much time I have invested in each topic in case I need to go back and study some more.
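A per-technology time log like this could also be kept in a few lines of Python instead of a document. The sketch below is only an illustration of the idea: the `sessions` list, the function name `total_time_per_topic` and the minutes shown are made-up sample values, not real study times.

```python
from collections import defaultdict
from datetime import timedelta

# Hypothetical study log: one (blueprint topic, minutes studied) entry per session.
sessions = [
    ("3.4. RIP", 90),
    ("3.5. EIGRP", 120),
    ("3.4. RIP", 45),
    ("3.6. OSPF", 150),
]


def total_time_per_topic(log):
    """Sum the logged minutes for each topic."""
    totals = defaultdict(int)
    for topic, minutes in log:
        totals[topic] += minutes
    return dict(totals)


totals = total_time_per_topic(sessions)

# Print the invested time per topic in h:mm:ss form.
for topic, minutes in sorted(totals.items()):
    print(f"{topic}: {timedelta(minutes=minutes)}")
```

Sorting by the total instead of by topic name would show at a glance which technologies have had the least time so far.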

I will approach this lab by learning from scratch, meaning that I will read all design documents and all release notes for all the technologies listed in the CCIE blueprint, just to be sure I at least have a decent chance of remembering having read about a topic if I get tested on it during the lab. This also means that I may read a lot of basic, non-CCIE-level theory just to see whether I've missed anything.

For instance, I feel extremely confident in general routing (EIGRP, OSPF, BGP, RIP, static), but I will still read through every "core" Cisco document on the technologies I can be tested on.

Note to self: although my plan is to use the INE study plan that Brian McGahan reordered for the CCIE RSv5 lab blueprint, I will have to change the order in which I study, simply because their videos do not follow their study plan.

I will try to study according to the study plan and keep things aligned with it. For example, I will not do EIGRP before RIP as their video series does. I will do the theory in advance based on the labs in their workbook, because the workbook is more closely aligned with the study plan.

As a final note, I would just like to say that this technique may not work for everyone. In fact it may only work for me, but it works really well for me! I personally strongly recommend a similar approach to studying, mainly because this way you find out what you DON'T already know, and that will be your weakest area. It is always good to get that on paper so you know what you need to study, instead of studying what you already know!

The order in which I am preparing for the CCIE RSv5 blueprint is the way Brian McGahan reordered it. It makes perfect sense. Most of the topics I'll post below are my study notes, and you'll see that they follow this list very closely. I've made some changes, mainly in the Security part, because I prefer to be through and done with a topic rather than go back to it at a later stage. I cross each topic off the list once I've studied it. That doesn't mean that I am done with it, just that I have at least a foundational knowledge of it. It also helps with knowing how far away the lab date is!

This is the list:

RSv5 Expanded Blueprint

Color explanation

Red = Topics not yet studied (meaning I have not studied these topics at CCIE level).

Blue = Studied topics that I consider myself confident enough in.

Dark Red = Studied topics that I feel I need to improve on before the lab.

Black = Topics that were initially in the blueprint but were later removed.

Struck through = Topics from the CCIE RSv5 blueprint that have been covered in my studies.

3. IP Routing

..3.1. Protocol Independent IPv4 Routing

....3.1.1. IPv4 Addressing

....3.1.2. IPv4 ARP

....3.1.3. Longest Match Routing

....3.1.4. Administrative Distance

....3.1.5. Static Routing

....3.1.6. Route Recursion

....3.1.7. Egress Interface vs. Next Hop Static Routing

....3.1.8. Default Routing

....3.1.9. CEF

....3.1.10. Floating Static Routes

....3.1.11. Backup Interface

....3.1.12. IP Service Level Agreement

....3.1.13. Enhanced Object Tracking

....3.1.14. Policy Routing

....3.1.15. Policy Routing and IP SLA

....3.1.16. Local Policy Routing

....3.1.17. GRE Tunnels

....3.1.18. IP in IP Tunnels

....3.1.19. Tunnels & Recursive Routing Errors

....3.1.20. On Demand Routing

....3.1.21. VRF Lite

....3.1.22. Bidirectional Forwarding Detection

....3.1.23. Performance Routing (PfR) *

..3.2. Protocol Independent IPv6 Routing

....3.2.1. IPv6 Link-Local Addressing

....3.2.2. IPv6 Unique Local Addressing

....3.2.3. IPv6 Global Aggregatable Addressing

....3.2.4. IPv6 EUI-64 Addressing

....3.2.5. IPv6 Auto-Configuration / SLAAC

....3.2.6. IPv6 Global Prefix

....3.2.7. IPv6 Redistribution

....3.2.8. IPv6 Filtering

....3.2.9. IPv6 NAT-PT

....3.2.10. IPv6 MP-BGP

....3.2.11. IPv6 Tunneling *

....3.2.12. Automatic 6to4 Tunneling *

....3.2.13. ISATAP Tunneling *

..3.3. Common Dynamic Routing Features

....3.3.1. Distance Vector vs. Link State vs. Path Vector routing protocols

....3.3.2. Passive Interfaces

....3.3.3. Routing Protocol Authentication

....3.3.4. Route Filtering

....3.3.5. Auto Summarization

....3.3.6. Manual Summarization

....3.3.7. Route Redistribution

......3.3.7.1. Prefix Filtering with Route Tagging

......3.3.7.2. Prefix Filtering with Manual Lists

......3.3.7.3. Prefix Filtering with Administrative Distance

......3.3.7.4. Administrative Distance Based Loops

......3.3.7.5. Metric Based Loops

..3.4. RIP

....3.4.1. RIPv2

......3.4.1.1. Initialization

........3.4.1.1.1. Enabling RIPv2

........3.4.1.1.2. RIP Send and Receive Versions

........3.4.1.1.3. Split Horizon

........3.4.1.1.4. RIPv2 Unicast Updates

........3.4.1.1.5. RIPv2 Broadcast Updates

........3.4.1.1.6. RIPv2 Source Validation

......3.4.1.2. Path Selection

........3.4.1.2.1. Offset List

......3.4.1.3. Summarization

........3.4.1.3.1. Auto-Summary

........3.4.1.3.2. Manual Summarization

......3.4.1.4. Authentication

........3.4.1.4.1. Clear Text

........3.4.1.4.2. MD5

......3.4.1.5. Convergence Optimization & Scalability

........3.4.1.5.1. RIPv2 Convergence Timers

........3.4.1.5.2. RIPv2 Triggered Updates

......3.4.1.6. Filtering

........3.4.1.6.1. Filtering with Passive Interface

........3.4.1.6.2. Filtering with Prefix-Lists

........3.4.1.6.3. Filtering with Standard Access-Lists

........3.4.1.6.4. Filtering with Extended Access-Lists

........3.4.1.6.5. Filtering with Offset Lists

........3.4.1.6.6. Filtering with Administrative Distance

........3.4.1.6.7. Filtering with Per Neighbor AD

......3.4.1.7. Default Routing

........3.4.1.7.1. RIPv2 Default Routing

........3.4.1.7.2. RIPv2 Conditional Default Routing

........3.4.1.7.3. RIPv2 Reliable Conditional Default Routing

....3.4.2. RIPng *

......3.4.2.1. RIPng Overview *

3.5. EIGRP

3.5.1. Initialization

3.5.1.1. Network Statement

3.5.1.2. Multicast vs. Unicast Updates

3.5.1.3. EIGRP Named Mode

3.5.1.4. EIGRP Multi AF Mode

3.5.1.5. EIGRP Split Horizon

3.5.1.6. EIGRP Next-Hop Processing

3.5.2. Path Selection

3.5.2.1. Feasibility Condition

3.5.2.2. Modifying EIGRP Vector Attributes

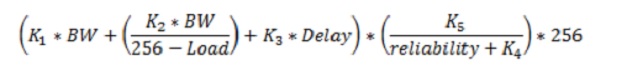

3.5.2.3. Classic Metric

3.5.2.4. Wide Metric

3.5.2.5. Metric Weights

3.5.2.6. Equal Cost Load Balancing

3.5.2.7. Unequal Cost Load Balancing

3.5.2.8. EIGRP Add-Path

3.5.3. Summarization

3.5.3.1. Auto-Summary

3.5.3.2. Manual Summarization

3.5.3.3. Summarization with Default Routing

3.5.3.4. Summarization with Leak Map

3.5.3.5. Summary Metric

3.5.4. Authentication

3.5.4.1. MD5

3.5.4.2. HMAC SHA2-256bit

3.5.4.3. Automatic key rollover

3.5.5. Convergence Optimization & Scalability

3.5.5.1. EIGRP Convergence Timers

3.5.5.2. EIGRP Query Scoping with Summarization

3.5.5.3. EIGRP Query Scoping with Stub Routing

3.5.5.4. Stub Routing with Leak Map

3.5.5.5. Bandwidth Pacing

3.5.5.6. IP FRR

3.5.5.7. Graceful Restart & NSF

3.5.6. Filtering

3.5.6.1. Filtering with Passive Interface

3.5.6.2. Filtering with Prefix-Lists

3.5.6.3. Filtering with Standard Access-Lists

3.5.6.4. Filtering with Extended Access-Lists

3.5.6.5. Filtering with Offset Lists

3.5.6.6. Filtering with Administrative Distance

3.5.6.7. Filtering with Per Neighbor AD

3.5.6.8. Filtering with Route Maps

3.5.6.9. Per Neighbor Prefix Limit

3.5.6.10. Redistribution Prefix Limit

3.5.7. Miscellaneous EIGRP

3.5.7.1. EIGRP Default Network

3.5.7.2. EIGRP Default Metric

3.5.7.3. EIGRP Neighbor Logging

3.5.7.4. EIGRP Router-ID

3.5.7.5. EIGRP Maximum Hops

3.5.7.6. no next-hop-self no-ecmp-mode

3.5.7.7. EIGRP Route Tag Enhancements

3.5.8. EIGRPv6

3.5.8.1. Enabling EIGRPv6

3.5.8.2. EIGRPv6 Split Horizon

3.5.8.3. EIGRPv6 Next-Hop Processing

3.5.8.4. EIGRPv6 Authentication

3.5.8.5. EIGRPv6 Metric Manipulation

3.5.8.6. EIGRPv6 Default Routing

3.5.8.7. EIGRPv6 Summarization

3.5.8.8. EIGRPv6 Prefix Filtering

3.5.8.9. EIGRPv6 Stub Routing

3.5.8.10. EIGRPv6 Link Bandwidth

3.5.8.11. EIGRPv6 Timers

3.5.8.12. EIGRP IPv6 VRF Lite

3.5.8.13. EIGRP Over The Top

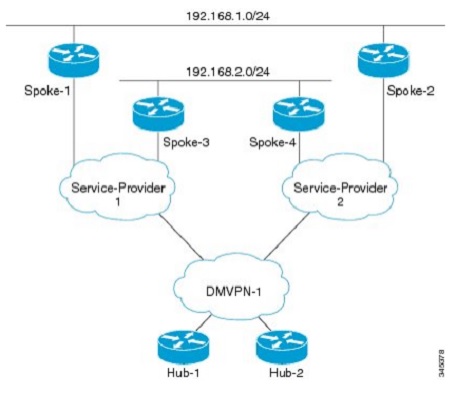

3.6. OSPF

3.6.1. Initialization

3.6.1.1. Network Statement

3.6.1.2. Interface Statement

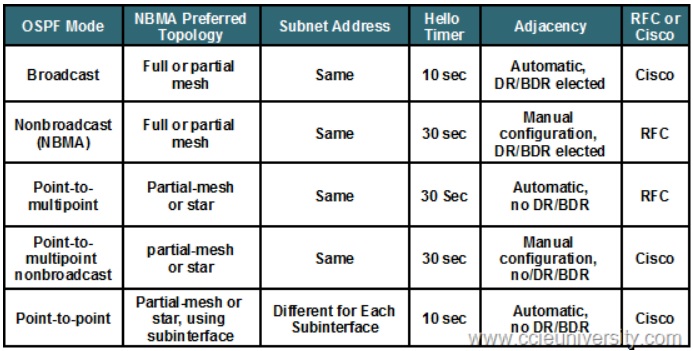

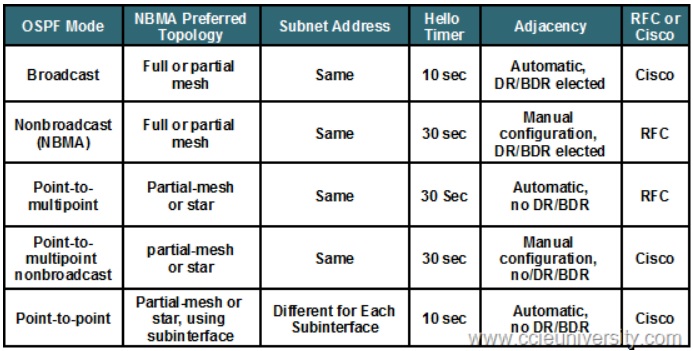

3.6.2. Network Types

3.6.2.1. Broadcast

3.6.2.2. Non-Broadcast

3.6.2.3. OSPF DR/BDR Election Manipulation

3.6.2.4. Point-to-Point

3.6.2.5. Point-to-Multipoint

3.6.2.6. Point-to-Multipoint Non-Broadcast

3.6.2.7. Loopback

3.6.2.8. LSA Types

3.6.2.9. OSPF Next-Hop Processing

3.6.2.10. Unicast vs. Multicast Hellos

3.6.3. Path Selection

3.6.3.1. Auto-Cost

3.6.3.2. Cost

3.6.3.3. Bandwidth

3.6.3.4. Per-Neighbor Cost

3.6.3.5. Non-Backbone Transit Areas

3.6.3.6. Virtual-Links

3.6.4. Authentication

3.6.4.1. Area

3.6.4.2. Interface level

3.6.4.3. Clear Text

3.6.4.4. MD5

3.6.4.5. Null

3.6.4.6. MD5 with Multiple Keys

3.6.4.7. SHA1-196

3.6.4.8. Virtual link

3.6.5. Summarization

3.6.5.1. Internal Summarization

3.6.5.2. External Summarization

3.6.5.3. Path Selection with Summarization

3.6.5.4. Summarization and Discard Routes

3.6.6. Stub Areas

3.6.6.1. Stub Areas

3.6.6.2. Totally Stubby Areas

3.6.6.3. Not-So-Stubby Areas

3.6.6.4. Not-So-Stubby Areas and Default Routing

3.6.6.5. Not-So-Totally-Stubby Areas

3.6.6.6. Stub Areas with Multiple Exit Points

3.6.6.7. NSSA Type-7 to Type-5 Translator Election

3.6.6.8. NSSA Redistribution Filtering

3.6.7. Filtering

3.6.7.1. Filtering with Distribute-Lists

3.6.7.2. Filtering with Administrative Distance

3.6.7.3. Filtering with Route-Maps

3.6.7.4. Filtering with Summarization

3.6.7.5. LSA Type-3 Filtering

3.6.7.6. Forwarding Address Suppression

3.6.7.7. NSSA ABR External Prefix Filtering

3.6.7.8. Database Filtering

3.6.8. Default Routing

3.6.8.1. Default Routing

3.6.8.2. Conditional Default Routing

3.6.8.3. Reliable Conditional Default Routing

3.6.8.4. Default Cost

3.6.9. Convergence Optimization & Scalability

3.6.9.1. Interface Timers

3.6.9.2. Fast Hellos

3.6.9.3. LSA & SPF Throttling

3.6.9.4. LSA & SPF Pacing

3.6.9.5. Single Hop LFA / IP FRR

3.6.9.6. Multihop LFA

3.6.9.7. Stub Router Advertisement

3.6.9.8. Demand Circuit

3.6.9.9. Flooding Reduction

3.6.9.10. Transit Prefix Filtering

3.6.9.11. Resource Limiting

3.6.9.12. Graceful Restart & NSF

3.6.9.13. Incremental SPF

3.6.10. Miscellaneous OSPF Features

3.6.11. OSPFv3

3.6.11.1. LSA Types

3.6.11.2. OSPFv3

3.6.11.3. OSPFv3 Network Types

3.6.11.4. OSPFv3 Prefix Suppression

3.6.11.5. OSPFv3 Virtual Links

3.6.11.6. OSPFv3 Summarization

3.6.11.7. OSPFv3 IPsec Authentication

3.6.11.8. OSPFv3 Multi AF Mode

3.6.11.9. TTL Security

3.7. BGP

3.7.1. Establishing Peerings

3.7.1.1. iBGP Peerings

3.7.1.2. EBGP Peerings

3.7.1.3. Update Source Modification

3.7.1.4. Multihop EBGP Peerings

3.7.1.5. Neighbor Disable-Connected-Check

3.7.1.6. Authentication

3.7.1.7. TTL Security

3.7.1.8. BGP Peer Groups

3.7.1.9. 4 Byte ASNs

3.7.1.10. Active vs. Passive Peers

3.7.1.11. Path MTU Discovery

3.7.1.12. Multi Session TCP Transport per AF

3.7.1.13. Dynamic BGP Peering

3.7.2. iBGP Scaling

3.7.2.1. Route Reflectors

3.7.2.2. Route Reflector Clusters

3.7.2.3. Confederations

3.7.3. BGP Next Hop Processing

3.7.3.1. Next-Hop-Self

3.7.3.2. Manual Next-Hop Modification

3.7.3.3. Third Party Next Hop

3.7.3.4. Next Hop Tracking

3.7.3.5. Conditional Next Hop Tracking

3.7.3.6. BGP Next-Hop Trigger Delay

3.7.4. BGP NLRI Origination

3.7.4.1. Network Statement

3.7.4.2. Redistribution

3.7.4.3. BGP Redistribute Internal

3.7.4.4. Conditional Advertisement

3.7.4.5. Conditional Route Injection

3.7.5. BGP Bestpath Selection

3.7.5.1. Weight

3.7.5.2. Local Preference

3.7.5.3. AS-Path Prepending

3.7.5.4. Origin

3.7.5.5. MED

3.7.5.6. Always Compare MED

3.7.5.7. Deterministic MED

3.7.5.8. AS-Path Ignore

3.7.5.9. Router-IDs

3.7.5.10. DMZ Link Bandwidth

3.7.5.11. Maximum AS Limit

3.7.5.12. Multipath

3.7.6. BGP Aggregation

3.7.6.1. BGP Auto-Summary

3.7.6.2. Aggregation

3.7.6.3. Summary Only

3.7.6.4. Suppress Map

3.7.6.5. Unsuppress Map

3.7.6.6. AS-Set

3.7.6.7. Attribute-Map

3.7.6.8. Advertise Map

3.7.7. BGP Communities

3.7.7.1. Standard

3.7.7.2. Extended

3.7.7.3. No-Advertise

3.7.7.4. No-Export

3.7.7.5. Local-AS

3.7.7.6. Deleting

3.7.8. Filtering

3.7.8.1. Prefix-Lists

3.7.8.2. Standard Access-Lists Task

3.7.8.3. Extended Access-Lists

3.7.8.4. Maximum Prefix

3.7.8.5. BGP Regular Expressions

3.7.8.6. Outbound Route Filtering (ORF)

3.7.8.7. Soft Reconfiguration Inbound

3.7.9. AS-Path Manipulation

3.7.9.1. Local AS

3.7.9.2. Local AS Replace-AS/Dual-AS

3.7.9.3. Remove Private AS

3.7.9.4. Allow AS In

3.7.9.5. AS Override

3.7.10. BGP Convergence Optimization

3.7.10.1. BGP Timers Tuning

3.7.10.2. BGP Fast Fallover

3.7.10.3. BGP Prefix Independent Convergence (PIC)

3.7.10.4. BGP Dampening

3.7.10.5. BGP Dampening with Route-Map

3.7.10.6. BGP Add Path

3.7.11. BGP Default Routing

3.7.12. IPv6 BGP

3.7.13. Misc BGP

3.7.13.1. iBGP Synchronization

3.7.13.2. BGP over GRE

3.7.13.3. BGP Backdoor

3.8. Route Redistribution

3.8.1. Metric Based Loops

3.8.2. Administrative Distance Based Loops

3.8.3. Route Tag Filtering

3.8.4. IP Route Profile

3.8.5. Debug IP Routing

3.9. Miscellaneous Routing Features

3.10. IS-IS *

4. VPN

4.1. MPLS

4.1.1. VRF Lite

4.1.2. MPLS LDP

4.1.3. MPLS Ping

4.1.4. MPLS Traceroute

4.1.5. MPLS Label Filtering

4.1.6. MP-BGP VPNv4

4.1.7. MP-BGP Prefix Filtering

4.1.8. PE-CE Routing with RIP

4.1.9. PE-CE Routing with OSPF

4.1.10. OSPF Sham-Link

4.1.11. PE-CE Routing with EIGRP

4.1.12. EIGRP Site-of-Origin

4.1.13. PE-CE Routing with BGP

4.1.14. BGP SoO Attribute

4.1.15. Internet Access

4.1.16. Route Leaking

4.1.17. MPLS VPN Performance Tuning

4.1.18. AToM *

4.1.19. L2TPV3 *

4.1.20. VPLS *

4.2. IPsec LAN-to-LAN

4.2.1. ISAKMP Policies

4.2.2. PSK Authentication

4.2.3. Static Crypto Maps

4.2.4. IPsec over GRE

4.2.5. Static VTI

4.2.6. GETVPN *

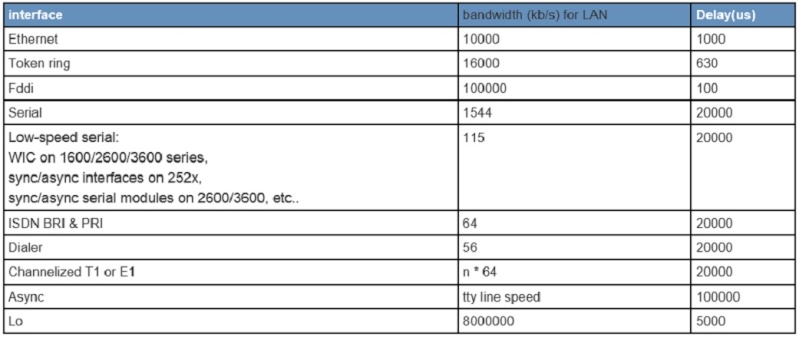

4.3. DMVPN

4.3.1. Single Hub

4.3.2. NHRP

4.3.3. DMVPN Phase 1, 2, & 3

4.3.4. QoS Profiles

4.3.5. QoS Pre-Classify

5. Multicast

5.1. Layer 2 Multicast

5.1.1. IGMPv1, IGMPv2, IGMPv3

5.1.2. IGMP Snooping

5.1.3. IGMP Querier Election

5.1.4. IGMP Filtering

5.1.5. IGMP Proxy

5.1.6. IGMP Timers

5.1.7. Multicast VLAN Registration

5.1.8. IGMP Profiles

5.2. IPv4 Multicast Routing

5.2.1. PIM Dense Mode

5.2.2. PIM Sparse Mode

5.2.3. PIM Sparse Dense Mode

5.2.4. Static RP

5.2.5. Auto-RP

5.2.5.1. Auto-RP

5.2.5.2. Sparse Dense Mode

5.2.5.3. Auto-RP Listener

5.2.5.4. Multiple Candidate RPs

5.2.5.5. Filtering Candidate RPs

5.2.5.6. RP & MA placement problems

5.2.6. Bootstrap Router

5.2.6.1. BSR

5.2.6.2. Multiple RP Candidates

5.2.6.3. Multiple BSR Candidates

5.2.7. Source Specific Multicast

5.2.8. Bidirectional PIM

5.2.9. Group to RP Mapping

5.2.10. Anycast RP

5.2.11. MSDP

5.2.12. MSDP SA Filtering

5.2.13. Multicast TTL Scoping

5.2.14. Auto-RP & BSR Boundary Filtering

5.2.15. PIM Accept Register Filtering

5.2.16. PIM Accept RP Filtering

5.2.17. RPF Failure

5.2.18. Registration Failure

5.2.19. PIM DR Election

5.2.20. PIM DF Election

5.2.21. PIM Assert

5.2.22. Static Multicast Routes

5.2.23. Multicast BGP

5.2.24. PIM NBMA Mode

5.2.25. Multicast over GRE

5.2.26. Stub Multicast Routing

5.2.27. Multicast Helper Map

5.2.28. Multicast Rate Limiting

5.2.29. Multicast BGP

5.3. IPv6 Multicast Routing *

5.3.1. IPv6 PIM and MLD *

5.3.2. IPv6 PIM BSR *

5.3.3. IPv6 Embedded RP *

5.3.4. IPv6 SSM *

6. QoS

6.1. Hold-Queue and Tx-Ring

6.2. Weighted Fair Queuing (WFQ)

6.3. Selective Packet Discard

6.4. Payload Compression on Serial Links

6.5. Generic TCP/UDP Header Compression

6.6. MLP Link Fragmentation and Interleaving

6.7. MQC Classification and Marking

6.8. MQC Bandwidth Reservations and CBWFQ

6.9. MQC Bandwidth Percent

6.10. MQC LLQ and Remaining Bandwidth Reservations

6.11. MQC WRED

6.12. MQC Dynamic Flows and WRED

6.13. MQC WRED with ECN

6.14. MQC Class-Based Generic Traffic Shaping

6.15. MQC Class-Based GTS and CBWFQ

6.16. MQC Single-Rate Three-Color Policer

6.17. MQC Hierarchical Policers

6.18. MQC Two-Rate Three-Color Policer

6.19. MQC Peak Shaping

6.20. MQC Percent-Based Policing

6.21. MQC Header Compression

6.22. Voice Adaptive Traffic Shaping

6.23. Voice Adaptive Fragmentation

6.24. Advanced HTTP Classification with NBAR

6.25. Layer 2 QoS *

7. Security

7.1. Layer 2 Security

7.1.1. Port Protection

7.1.2. Private VLANs

7.1.3. Port Based ACLs

7.1.4. VLAN ACLs for IP Traffic

7.1.5. VLAN ACLs for Non-IP Traffic

7.1.6. Storm Control

7.1.7. Port Security

7.1.8. HSRP and Port-Security

7.1.9. ErrDisable Recovery

7.1.10. DHCP Snooping

7.1.11. DHCP Snooping and the Information Option

7.1.12. Dynamic ARP Inspection

7.1.13. IP Source Guard

7.1.14. 802.1x *

7.2. Management Plane Security

7.2.1. AAA Authentication Lists

7.2.2. AAA Exec Authorization

7.2.3. AAA Local Command Authorization

7.2.4. Controlling Terminal Line Access

7.2.5. IOS Login Enhancements

7.2.6. IOS Resilient Configuration

7.2.7. Role-Based CLI

7.2.8. AAA with TACACS+ and RADIUS *

7.3. Control Plane Security

7.3.1. Controlling the ICMP Messages Rate

7.3.2. Control Plane Policing

7.3.3. Control Plane Protection (CPPr)

7.3.4. Control Plane Host

7.4. Data Plane Security

7.4.1. Traffic Filtering Using Standard Access-Lists

7.4.2. Traffic Filtering Using Extended Access-Lists

7.4.3. Traffic Filtering Using Reflexive Access-Lists

7.4.4. IPv6 Traffic Filter

7.4.5. Filtering Fragmented Packets

7.4.6. Filtering Packets with Dynamic Access-Lists

7.4.7. Filtering Traffic with Time-Based Access Lists

7.4.8. Traffic Filtering with Policy-Based Routing

7.4.9. Preventing Packet Spoofing with uRPF

7.4.10. Using NBAR for Content-Based Filtering

7.4.11. TCP Intercept

7.4.12. TCP Intercept Watch Mode

7.4.13. Packet Logging with Access-Lists

7.4.14. IP Source Tracker

7.4.15. Router IP Traffic Export (RITE)

7.4.16. IOS ACL Selective IP Option Drop

7.4.17. Flexible Packet Matching

7.4.18. IPv6 First Hop Security

7.4.18.1. RA guard

7.4.18.2. DHCP guard

7.4.18.3. Binding table

7.4.18.4. Device tracking

7.4.18.5. ND inspection/snooping

7.4.18.6. Source guard

7.4.18.7. PACL

8. System Management

8.1. Device Management

8.1.1. Console

8.1.2. Telnet

8.1.2.1. Telnet Service Options

8.1.3. SSH

8.1.4. Terminal Line Settings

8.1.5. HTTP Server and Client

8.1.6. FTP Server and Client

8.1.7. TFTP Server and Client

8.1.8. SNMP

8.1.8.1. SNMPv2 Server

8.1.8.2. SNMPv2c Access Control

8.1.8.3. SNMP Traps and Informs

8.1.8.4. CPU and Memory Thresholds

8.1.8.5. SNMPv3

8.1.8.6. SNMP MAC Address Notifications

8.1.8.7. SNMP Notifications of Syslog Messages

8.2. Logging

8.2.1. System Message Logging

8.2.2. Syslog Logging

8.2.3. Logging Counting and Timestamps

8.2.4. Logging to Flash Memory

8.2.5. Configuration Change Notification and Logging

8.2.6. Configuration Archive and Rollback

8.2.7. Logging with Access-Lists

8.3. NTP

8.3.1. NTP

8.3.2. NTP Authentication

8.3.3. NTP Access Control

8.3.4. NTP Version 3 & 4

8.4. EEM

8.4.1. KRON Command Schedule

8.4.2. EEM Scripting: Interface Events

8.4.3. EEM Scripting: Syslog Events

8.4.4. EEM Scripting: CLI Events

8.4.5. EEM Scripting: Periodic Scheduling

8.4.6. EEM Scripting: Advanced Features

8.4.7. EEM Applets

8.5. Miscellaneous System Management

8.5.1. Auto-Install over LAN Interfaces using DHCP

8.5.2. Auto-Install over LAN Interfaces Using RARP

8.5.3. IOS Menus

8.5.4. IOS Banners

8.5.5. Exec Aliases

8.5.6. TCP Keepalives

8.5.7. Generating Exception Core Dumps

8.5.8. Conditional Debugging

8.5.9. Tuning Packet Buffers

8.5.10. CDP

8.5.11. Remote Shell

9. Network Services

9.1. Object Tracking

9.1.1. IP SLA

9.1.2. Enhanced Object Tracking

9.1.3. Tracking Lists

9.2. First Hop Redundancy Protocols

9.2.1. HSRP

9.2.2. VRRP

9.2.3. GLBP

9.2.4. Router Redundancy and Object Tracking

9.2.5. IPv6 RS & RA Redundancy

9.3. DHCP

9.3.1. DHCP Server

9.3.2. DHCP Client

9.3.3. DHCP Relay

9.3.4. DHCP Host Pools

9.3.5. DHCP On-Demand Pool

9.3.6. DHCP Proxy

9.3.7. DHCP Information Option

9.3.8. DHCP Authorized ARP

9.3.9. SLAAC/DHCPv6 interaction

9.3.10. Stateful & Stateless DHCPv6

9.3.11. DHCPv6 prefix delegation

9.4. DNS

9.4.1. IOS Authoritative DNS Server

9.4.2. IOS Caching DNS Server

9.4.3. IOS DNS Spoofing

9.5. NAT

9.5.1. Basic NAT

9.5.2. NAT Overload

9.5.3. NAT with Route Maps

9.5.4. Static NAT

9.5.5. Static PAT

9.5.6. Static NAT and IP Aliasing

9.5.7. Static Policy NAT

9.5.8. NAT with Overlapping Subnets

9.5.9. TCP Load Distribution with NAT

9.5.10. Stateful NAT with HSRP

9.5.11. Stateful NAT with Primary/Backup

9.5.12. NAT Virtual Interface

9.5.13. NAT Default Interface

9.5.14. Reversible NAT

9.5.15. Static Extendable NAT

9.5.16. NAT ALG

9.6. Traffic Accounting

9.6.1. IP Precedence Accounting

9.6.2. IP Output Packet Accounting

9.6.3. IP Access Violation Accounting

9.6.4. MAC Address Accounting

9.7. NetFlow

9.7.1. Netflow v5 & v9

9.7.2. Netflow Ingress and Egress

9.7.3. Netflow Top Talkers

9.7.4. Netflow Aggregation Cache

9.7.5. Netflow Random Sampling

9.7.6. Netflow Input Filters

9.7.7. Netflow Export

9.8. Miscellaneous Network Services

9.8.1. Proxy ARP

9.8.2. IRDP

9.8.3. Router ICMP Settings

9.8.3.1. TCP Optimization

9.8.4. IOS Small Services and Finger

9.8.5. Directed Broadcasts and UDP Forwarding

9.8.6. NBAR Protocol Discovery

9.8.7. IP Event Dampening

9.8.8. Conditional Debugging

9.8.9. Embedded Packet Capture

9.8.10. Interpreting Packet Captures

All in all, I would say that I'm roughly 50% through my studies before I book the exam, so it's still a long journey! I was recently asked in another place how to prepare for the exam, so I will add my answer here as well for information. I quote:

Daniel Larsson wrote: I get asked a lot of questions about how to prepare for exams or how to best learn a specific topic. I am slowly (too slowly) preparing myself for the RSv5 lab exam, and someone might find it interesting to know how to prepare for an exam like that.

This is actually how I am currently studying for both the CCDP and the CCIE RSv5 lab. I find that this technique works very well for me, and it makes sure I don't overlook any small details or any topic that I haven't heard of before.

This is a copy-and-paste from my CCIE study notes document, so mind the words used ;-).

My three general steps are:

- First, read through the configuration and technology explanations for the IOS 15.3T release, if there are any for the specific blueprint topic. If there are none, go to other sources such as RFCs, forums, CCIE blogs and Cisco books and study the theory there.

- Secondly, watch the INE CCIE videos about the topic to learn it in more depth. I've noticed that their video series does not follow their own reordered study plan for the CCIE RSv5 blueprint, so I watch the videos in the order that matches the study plan rather than the order they come in.

- Lastly, lab it up using the INE CCIE RSv5 topology and their CCIE workbook. Most topics are covered in the workbook, but for topics I don't feel confident enough in I will create my own labs to verify the theory.

Repeat this for each and every topic that is in the CCIE RSv5 LAB blueprint. Layer 3 technologies consists of most part of the CCIE LAB so it's going to take a lot of time to prepare for this! Estimated the Layer 3 configuration is about 85% of the scope of the exam.

As a preparation for CCIE i have also noted my preparation time in this document listed by a per-technology basis. It will keep me reminded about how much time is invested in each topic in case i need to go back and study some more.

I will approach this lab by learning from scratch, meaning that I will read all design documents and all release notes about all the technologies that are listed in the CCIE blueprint just to be sure I at least have a decent chance of remembering reading about it in case i get tested on it during the lab. This also means that i may read a lot of basic-non CCIE level of theory to just see if I've missed anything.

For instance, i feel extremely confident in general routing such as (EIGRP, OSPF, BGP, RIP, Static) but I will still read through every "core" document by cisco on the technologies I can be tested on.

Note to self: Although my plan is to use the INE Study plan that Brian McGahan reordered for the CCIE RSv5 Lab blueprint i will have to make changes to the study plan and in which order to study just because their videos are not following their study-plan.

I will try to study according to the study plan and make it more aligned. For example, i will not do EIGRP before RIP as their Video series does. I will just do the theory in advance based on the labs in their workbook because their workbook is more aligned with their study plan.

As a final note i would just like to say that this technique may not work for all. In fact it may only work for me, but it works really well for me! I personally strongly recommend a simular approach of studying. Mainly because in this way you will learn what you DON'T already know and that will be your weakest area. So it will Always be good to get that on paper so you know what you need to study instead of studying what you already know!

The complete way of studying that I am preparing for the CCIE RSv5 Blueprint is how Brian McGahan reordered it. It makes perfect sense. Most of the topics i'll post below are my study notes and you'll see that they follow this list very much. I've made some Changes mainly in the Security part because I prefer to be through-and-done with a topic rather than go back at a later stage. I then cross every topic out of the list as I've studied them. That doesn't mean that I am ready with them, just that I have at least a foundational knowledge about them. It also helps with knowing how long away the Lab date is!

This is the list:

RSv5 Expanded Blueprint

Color explanation

Red = Not studied topics. (meaning I have not studies these topics at CCIE Level)

Blue = Studied topics that I consider myself Confident enough in.

Dark Red = Studied topics but I feel that I need to improve on Before lab.

Black = Topics initially in the Blueprint but was later removed.

3. IP Routing

..3.1. Protocol Independent IPv4 Routing

....3.1.2. IPv4 ARP

....3.1.3. Longest Match Routing

....3.1.4. Administrative Distance

....3.1.5. Static Routing

....3.1.6. Route Recursion

....3.1.7. Egress Interface vs. Next Hop Static Routing

....3.1.8. Default Routing

....3.1.9. CEF

....3.1.10. Floating Static Routes

....3.1.11. Backup Interface

....3.1.12. IP Service Level Agreement

....3.1.13. Enhanced Object Tracking

....3.1.14. Policy Routing

....3.1.15. Policy Routing and IP SLA

....3.1.16. Local Policy Routing

....3.1.17. GRE Tunnels

....3.1.18. IP in IP Tunnels

....3.1.19. Tunnels & Recursive Routing Errors

....3.1.20. On Demand Routing

....3.1.21. VRF Lite

....3.1.22. Bidirectional Forwarding Detection

....3.1.23. Performance Routing (PfR) *

..3.2. Protocol Independent IPv6 Routing

....3.2.1. IPv6 Link-Local Addressing

....3.2.2. IPv6 Unique Local Addressing

....3.2.3. IPv6 Global Aggregatable Addressing

....3.2.4. IPv6 EUI-64 Addressing

....3.2.5. IPv6 Auto-Configuration / SLAAC

....3.2.6. IPv6 Global Prefix

....3.2.7. IPv6 Redistribution

....3.2.8. IPv6 Filtering

....3.2.9. IPv6 NAT-PT

....3.2.10. IPv6 MP-BGP

....3.2.11. IPv6 Tunneling *

....3.2.12. Automatic 6to4 Tunneling*

....3.2.13. ISATAP Tunneling *

....3.3.1. Distance Vector vs. Link State vs. Path Vector routing protocols

....3.3.2. Passive Interfaces

....3.3.3. Routing Protocol Authentication

....3.3.4. Route Filtering

....3.3.5. Auto Summarization

....3.3.6. Manual Summarization

....3.3.7. Route Redistribution

......3.3.7.1. Prefix Filtering with Route Tagging

......3.3.7.2. Prefix Filtering with Manual Lists

......3.3.7.3. Prefix Filtering with Administrative Distance

......3.3.7.4. Administrative Distance Based Loops

......3.3.7.5. Metric Based Loops

..3.4. RIP

......3.4.1.1. Initialization

........3.4.1.1.1. Enabling RIPv2

........3.4.1.1.2. RIP Send and Receive Versions

........3.4.1.1.3. Split Horizon

........3.4.1.1.4. RIPv2 Unicast Updates

........3.4.1.1.5. RIPv2 Broadcast Updates

........3.4.1.1.6. RIPv2 Source Validation

......3.4.1.2. Path Selection

........3.4.1.2.1. Offset List

......3.4.1.3. Summarization

........3.4.1.3.1. Auto-Summary

........3.4.1.3.2. Manual Summarization

......3.4.1.4. Authentication

........3.4.1.4.1. Clear Text

........3.4.1.4.2. MD5

......3.4.1.5. Convergence Optimization & Scalability

........3.4.1.5.1. RIPv2 Convergence Timers

........3.4.1.5.2. RIPv2 Triggered Updates

......3.4.1.6. Filtering

........3.4.1.6.1. Filtering with Passive Interface

........3.4.1.6.2. Filtering with Prefix-Lists

........3.4.1.6.3. Filtering with Standard Access-Lists

........3.4.1.6.4. Filtering with Extended Access-Lists

........3.4.1.6.5. Filtering with Offset Lists

........3.4.1.6.6. Filtering with Administrative Distance

........3.4.1.6.7. Filtering with Per Neighbor AD

......3.4.1.7. Default Routing

........3.4.1.7.1. RIPv2 Default Routing

........3.4.1.7.2. RIPv2 Conditional Default Routing

........3.4.1.7.3. RIPv2 Reliable Conditional Default Routing

....3.4.2. RIPng *

......3.4.2.1. RIPng Overview *

3.5. EIGRP

3.5.1. Initialization

3.5.1.1. Network Statement

3.5.1.2. Multicast vs. Unicast Updates

3.5.1.3. EIGRP Named Mode

3.5.1.4. EIGRP Multi AF Mode

3.5.1.5. EIGRP Split Horizon

3.5.1.6. EIGRP Next-Hop Processing

3.5.2. Path Selection

3.5.2.1. Feasibility Condition

3.5.2.2. Modifying EIGRP Vector Attributes

3.5.2.3. Classic Metric

3.5.2.4. Wide Metric

3.5.2.5. Metric Weights

3.5.2.6. Equal Cost Load Balancing

3.5.2.7. Unequal Cost Load Balancing

3.5.2.8. EIGRP Add-Path

3.5.3. Summarization

3.5.3.1. Auto-Summary

3.5.3.2. Manual Summarization

3.5.3.3. Summarization with Default Routing

3.5.3.4. Summarization with Leak Map

3.5.3.5. Summary Metric

3.5.4. Authentication

3.5.4.1. MD5

3.5.4.2. HMAC SHA2-256bit

3.5.4.3. Automatic key rollover

3.5.5. Convergence Optimization & Scalability

3.5.5.1. EIGRP Convergence Timers

3.5.5.2. EIGRP Query Scoping with Summarization

3.5.5.3. EIGRP Query Scoping with Stub Routing

3.5.5.4. Stub Routing with Leak Map

3.5.5.5. Bandwidth Pacing

3.5.5.6. IP FRR

3.5.5.7. Graceful Restart & NSF

3.5.6. Filtering

3.5.6.1. Filtering with Passive Interface

3.5.6.2. Filtering with Prefix-Lists

3.5.6.3. Filtering with Standard Access-Lists

3.5.6.4. Filtering with Extended Access-Lists

3.5.6.5. Filtering with Offset Lists

3.5.6.6. Filtering with Administrative Distance

3.5.6.7. Filtering with Per Neighbor AD

3.5.6.8. Filtering with Route Maps

3.5.6.9. Per Neighbor Prefix Limit

3.5.6.10. Redistribution Prefix Limit

3.5.7. Miscellaneous EIGRP

3.5.7.1. EIGRP Default Network

3.5.7.2. EIGRP Default Metric

3.5.7.3. EIGRP Neighbor Logging

3.5.7.4. EIGRP Router-ID

3.5.7.5. EIGRP Maximum Hops

3.5.7.6. no next-hop-self no-ecmp-mode

3.5.7.7. EIGRP Route Tag Enhancements

3.5.8. EIGRPv6

3.5.8.1. Enabling EIGRPv6

3.5.8.2. EIGRPv6 Split Horizon

3.5.8.3. EIGRPv6 Next-Hop Processing

3.5.8.4. EIGRPv6 Authentication

3.5.8.5. EIGRPv6 Metric Manipulation

3.5.8.6. EIGRPv6 Default Routing

3.5.8.7. EIGRPv6 Summarization

3.5.8.8. EIGRPv6 Prefix Filtering

3.5.8.9. EIGRPv6 Stub Routing

3.5.8.10. EIGRPv6 Link Bandwidth

3.5.8.11. EIGRPv6 Timers

3.5.8.12. EIGRP IPv6 VRF Lite

3.5.8.13. EIGRP Over The Top

3.6. OSPF

3.6.1. Initialization

3.6.1.1. Network Statement

3.6.1.2. Interface Statement

3.6.2. Network Types

3.6.2.1. Broadcast

3.6.2.2. Non-Broadcast

3.6.2.3. OSPF DR/BDR Election Manipulation

3.6.2.4. Point-to-Point

3.6.2.5. Point-to-Multipoint

3.6.2.6. Point-to-Multipoint Non-Broadcast

3.6.2.7. Loopback

3.6.2.8. LSA Types

3.6.2.9. OSPF Next-Hop Processing

3.6.2.10. Unicast vs. Multicast Hellos

3.6.3. Path Selection

3.6.3.1. Auto-Cost

3.6.3.2. Cost

3.6.3.3. Bandwidth

3.6.3.4. Per-Neighbor Cost

3.6.3.5. Non-Backbone Transit Areas

3.6.3.6. Virtual-Links

3.6.4. Authentication

3.6.4.1. Area

3.6.4.2. Interface level

3.6.4.3. Clear Text

3.6.4.4. MD5

3.6.4.5. Null

3.6.4.6. MD5 with Multiple Keys

3.6.4.7. SHA1-196

3.6.4.8. Virtual link

3.6.5. Summarization

3.6.5.1. Internal Summarization

3.6.5.2. External Summarization

3.6.5.3. Path Selection with Summarization

3.6.5.4. Summarization and Discard Routes

3.6.6. Stub Areas

3.6.6.1. Stub Areas

3.6.6.2. Totally Stubby Areas

3.6.6.3. Not-So-Stubby Areas

3.6.6.4. Not-So-Stubby Areas and Default Routing

3.6.6.5. Not-So-Totally-Stubby Areas

3.6.6.6. Stub Areas with Multiple Exit Points

3.6.6.7. NSSA Type-7 to Type-5 Translator Election

3.6.6.8. NSSA Redistribution Filtering

3.6.7. Filtering

3.6.7.1. Filtering with Distribute-Lists

3.6.7.2. Filtering with Administrative Distance

3.6.7.3. Filtering with Route-Maps

3.6.7.4. Filtering with Summarization

3.6.7.5. LSA Type-3 Filtering

3.6.7.6. Forwarding Address Suppression

3.6.7.7. NSSA ABR External Prefix Filtering

3.6.7.8. Database Filtering

3.6.8. Default Routing

3.6.8.1. Default Routing

3.6.8.2. Conditional Default Routing

3.6.8.3. Reliable Conditional Default Routing

3.6.8.4. Default Cost

3.6.9. Convergence Optimization & Scalability

3.6.9.1. Interface Timers

3.6.9.2. Fast Hellos

3.6.9.3. LSA & SPF Throttling

3.6.9.4. LSA & SPF Pacing

3.6.9.5. Single Hop LFA / IP FRR

3.6.9.6. Multihop LFA

3.6.9.7. Stub Router Advertisement

3.6.9.8. Demand Circuit

3.6.9.9. Flooding Reduction

3.6.9.10. Transit Prefix Filtering

3.6.9.11. Resource Limiting

3.6.9.12. Graceful Restart & NSF

3.6.9.13. Incremental SPF

3.6.10. Miscellaneous OSPF Features

3.6.11. OSPFv3

3.6.11.1. LSA Types

3.6.11.2. OSPFv3

3.6.11.3. OSPFv3 Network Types

3.6.11.4. OSPFv3 Prefix Suppression

3.6.11.5. OSPFv3 Virtual Links

3.6.11.6. OSPFv3 Summarization

3.6.11.7. OSPFv3 IPsec Authentication

3.6.11.8. OSPFv3 Multi AF Mode

3.6.11.9. TTL Security

3.7. BGP

3.7.1. Establishing Peerings

3.7.1.1. iBGP Peerings

3.7.1.2. EBGP Peerings

3.7.1.3. Update Source Modification

3.7.1.4. Multihop EBGP Peerings

3.7.1.5. Neighbor Disable-Connected-Check

3.7.1.6. Authentication

3.7.1.7. TTL Security

3.7.1.8. BGP Peer Groups

3.7.1.9. 4 Byte ASNs

3.7.1.10. Active vs. Passive Peers

3.7.1.11. Path MTU Discovery

3.7.1.12. Multi Session TCP Transport per AF

3.7.1.13. Dynamic BGP Peering

3.7.2. iBGP Scaling

3.7.2.1. Route Reflectors

3.7.2.2. Route Reflector Clusters

3.7.2.3. Confederations

3.7.3. BGP Next Hop Processing

3.7.3.1. Next-Hop-Self

3.7.3.2. Manual Next-Hop Modification

3.7.3.3. Third Party Next Hop

3.7.3.4. Next Hop Tracking

3.7.3.5. Conditional Next Hop Tracking

3.7.3.6. BGP Next-Hop Trigger Delay

3.7.4. BGP NLRI Origination

3.7.4.1. Network Statement

3.7.4.2. Redistribution

3.7.4.3. BGP Redistribute Internal

3.7.4.4. Conditional Advertisement

3.7.4.5. Conditional Route Injection

3.7.5. BGP Bestpath Selection

3.7.5.1. Weight

3.7.5.2. Local Preference

3.7.5.3. AS-Path Prepending

3.7.5.4. Origin

3.7.5.5. MED

3.7.5.6. Always Compare MED

3.7.5.7. Deterministic MED

3.7.5.8. AS-Path Ignore

3.7.5.9. Router-IDs

3.7.5.10. DMZ Link Bandwidth

3.7.5.11. Maximum AS Limit

3.7.5.12. Multipath

3.7.6. BGP Aggregation

3.7.6.1. BGP Auto-Summary

3.7.6.2. Aggregation

3.7.6.3. Summary Only

3.7.6.4. Suppress Map

3.7.6.5. Unsuppress Map

3.7.6.6. AS-Set

3.7.6.7. Attribute-Map

3.7.6.8. Advertise Map

3.7.7. BGP Communities

3.7.7.1. Standard

3.7.7.2. Extended

3.7.7.3. No-Advertise

3.7.7.4. No-Export

3.7.7.5. Local-AS

3.7.7.6. Deleting

3.7.8. Filtering

3.7.8.1. Prefix-Lists

3.7.8.2. Standard Access-Lists

3.7.8.3. Extended Access-Lists

3.7.8.4. Maximum Prefix

3.7.8.5. BGP Regular Expressions

3.7.8.6. Outbound Route Filtering (ORF)

3.7.8.7. Soft Reconfiguration Inbound

3.7.9. AS-Path Manipulation

3.7.9.1. Local AS

3.7.9.2. Local AS Replace-AS/Dual-AS

3.7.9.3. Remove Private AS

3.7.9.4. Allow AS In

3.7.9.5. AS Override

3.7.10. BGP Convergence Optimization

3.7.10.1. BGP Timers Tuning

3.7.10.2. BGP Fast Fallover

3.7.10.3. BGP Prefix Independent Convergence (PIC)

3.7.10.4. BGP Dampening

3.7.10.5. BGP Dampening with Route-Map

3.7.10.6. BGP Add Path

3.7.11. BGP Default Routing

3.7.12. IPv6 BGP

3.7.13. Misc BGP

3.7.13.1. iBGP Synchronization

3.7.13.2. BGP over GRE

3.7.13.3. BGP Backdoor

3.8. Route Redistribution

3.8.1. Metric Based Loops

3.8.2. Administrative Distance Based Loops

3.8.3. Route Tag Filtering

3.8.4. IP Route Profile

3.8.5. Debug IP Routing

3.9. Miscellaneous Routing Features

3.10. IS-IS *

4. VPN

4.1. MPLS

4.1.1. VRF Lite

4.1.2. MPLS LDP

4.1.3. MPLS Ping

4.1.4. MPLS Traceroute

4.1.5. MPLS Label Filtering

4.1.6. MP-BGP VPNv4

4.1.7. MP-BGP Prefix Filtering

4.1.8. PE-CE Routing with RIP

4.1.9. PE-CE Routing with OSPF

4.1.10. OSPF Sham-Link

4.1.11. PE-CE Routing with EIGRP

4.1.12. EIGRP Site-of-Origin

4.1.13. PE-CE Routing with BGP

4.1.14. BGP SoO Attribute

4.1.15. Internet Access

4.1.16. Route Leaking

4.1.17. MPLS VPN Performance Tuning

4.1.18. AToM *

4.1.19. L2TPV3 *

4.1.20. VPLS *

4.2. IPsec LAN-to-LAN

4.2.1. ISAKMP Policies

4.2.2. PSK Authentication

4.2.3. Static Crypto Maps

4.2.4. IPsec over GRE

4.2.5. Static VTI

4.2.6. GETVPN *

4.3. DMVPN

4.3.1. Single Hub

4.3.2. NHRP

4.3.3. DMVPN Phase 1, 2, & 3

4.3.4. QoS Profiles

4.3.5. QoS Pre-Classify

5. Multicast

5.1. Layer 2 Multicast

5.1.1. IGMPv1, IGMPv2, IGMPv3

5.1.2. IGMP Snooping

5.1.3. IGMP Querier Election

5.1.4. IGMP Filtering

5.1.5. IGMP Proxy

5.1.6. IGMP Timers

5.1.7. Multicast VLAN Registration

5.1.8. IGMP Profiles

5.2. IPv4 Multicast Routing

5.2.1. PIM Dense Mode

5.2.2. PIM Sparse Mode

5.2.3. PIM Sparse Dense Mode

5.2.4. Static RP

5.2.5. Auto-RP

5.2.5.1. Auto-RP

5.2.5.2. Sparse Dense Mode

5.2.5.3. Auto-RP Listener

5.2.5.4. Multiple Candidate RPs

5.2.5.5. Filtering Candidate RPs

5.2.5.6. RP & MA placement problems

5.2.6. Bootstrap Router

5.2.6.1. BSR

5.2.6.2. Multiple RP Candidates

5.2.6.3. Multiple BSR Candidates

5.2.7. Source Specific Multicast

5.2.8. Bidirectional PIM

5.2.9. Group to RP Mapping

5.2.10. Anycast RP

5.2.11. MSDP

5.2.12. MSDP SA Filtering

5.2.13. Multicast TTL Scoping

5.2.14. Auto-RP & BSR Boundary Filtering

5.2.15. PIM Accept Register Filtering

5.2.16. PIM Accept RP Filtering

5.2.17. RPF Failure

5.2.18. Registration Failure

5.2.19. PIM DR Election

5.2.20. PIM DF Election

5.2.21. PIM Assert

5.2.22. Static Multicast Routes

5.2.23. Multicast BGP

5.2.24. PIM NBMA Mode

5.2.25. Multicast over GRE

5.2.26. Stub Multicast Routing

5.2.27. Multicast Helper Map

5.2.28. Multicast Rate Limiting

5.2.29. Multicast BGP

5.3. IPv6 Multicast Routing *

5.3.1. IPv6 PIM and MLD *

5.3.2. IPv6 PIM BSR *

5.3.3. IPv6 Embedded RP *

5.3.4. IPv6 SSM *

6. QoS

6.1. Hold-Queue and Tx-Ring

6.2. Weighted Fair Queuing (WFQ)

6.3. Selective Packet Discard

6.4. Payload Compression on Serial Links

6.5. Generic TCP/UDP Header Compression

6.6. MLP Link Fragmentation and Interleaving

6.7. MQC Classification and Marking

6.8. MQC Bandwidth Reservations and CBWFQ

6.9. MQC Bandwidth Percent

6.10. MQC LLQ and Remaining Bandwidth Reservations

6.11. MQC WRED

6.12. MQC Dynamic Flows and WRED

6.13. MQC WRED with ECN

6.14. MQC Class-Based Generic Traffic Shaping

6.15. MQC Class-Based GTS and CBWFQ

6.16. MQC Single-Rate Three-Color Policer

6.17. MQC Hierarchical Policers

6.18. MQC Two-Rate Three-Color Policer

6.19. MQC Peak Shaping

6.20. MQC Percent-Based Policing

6.21. MQC Header Compression

6.22. Voice Adaptive Traffic Shaping

6.23. Voice Adaptive Fragmentation

6.24. Advanced HTTP Classification with NBAR

6.25. Layer 2 QoS *

7. Security

7.1. Layer 2 Security

7.1.1. Port Protection

7.1.2. Private VLANs

7.1.3. Port Based ACLs

7.1.4. VLAN ACLs for IP Traffic

7.1.5. VLAN ACLs for Non-IP Traffic

7.1.6. Storm Control

7.1.7. Port Security

7.1.8. HSRP and Port-Security

7.1.9. ErrDisable Recovery

7.1.10. DHCP Snooping

7.1.11. DHCP Snooping and the Information Option

7.1.12. Dynamic ARP Inspection

7.1.13. IP Source Guard

7.1.14. 802.1x *

7.2. Management Plane Security

7.2.1. AAA Authentication Lists

7.2.2. AAA Exec Authorization

7.2.3. AAA Local Command Authorization

7.2.4. Controlling Terminal Line Access

7.2.5. IOS Login Enhancements

7.2.6. IOS Resilient Configuration

7.2.7. Role-Based CLI

7.2.8. AAA with TACACS+ and RADIUS *

7.3. Control Plane Security

7.3.1. Controlling the ICMP Messages Rate

7.3.2. Control Plane Policing

7.3.3. Control Plane Protection (CPPr)

7.3.4. Control Plane Host

7.4. Data Plane Security

7.4.1. Traffic Filtering Using Standard Access-Lists

7.4.2. Traffic Filtering Using Extended Access-Lists

7.4.3. Traffic Filtering Using Reflexive Access-Lists

7.4.4. IPv6 Traffic Filter

7.4.5. Filtering Fragmented Packets

7.4.6. Filtering Packets with Dynamic Access-Lists

7.4.7. Filtering Traffic with Time-Based Access Lists

7.4.8. Traffic Filtering with Policy-Based Routing

7.4.9. Preventing Packet Spoofing with uRPF

7.4.10. Using NBAR for Content-Based Filtering

7.4.11. TCP Intercept

7.4.12. TCP Intercept Watch Mode

7.4.13. Packet Logging with Access-Lists

7.4.14. IP Source Tracker

7.4.15. Router IP Traffic Export (RITE)

7.4.16. IOS ACL Selective IP Option Drop

7.4.17. Flexible Packet Matching

7.4.18. IPv6 First Hop Security

7.4.18.1. RA guard

7.4.18.2. DHCP guard

7.4.18.3. Binding table

7.4.18.4. Device tracking

7.4.18.5. ND inspection/snooping

7.4.18.6. Source guard

7.4.18.7. PACL

8. System Management

8.1. Device Management

8.1.1. Console

8.1.2. Telnet

8.1.2.1. Telnet Service Options

8.1.3. SSH

8.1.4. Terminal Line Settings

8.1.5. HTTP Server and Client

8.1.6. FTP Server and Client

8.1.7. TFTP Server and Client

8.1.8. SNMP

8.1.8.1. SNMPv2 Server

8.1.8.2. SNMPv2c Access Control

8.1.8.3. SNMP Traps and Informs

8.1.8.4. CPU and Memory Thresholds

8.1.8.5. SNMPv3

8.1.8.6. SNMP MAC Address Notifications

8.1.8.7. SNMP Notifications of Syslog Messages

8.2. Logging

8.2.1. System Message Logging

8.2.2. Syslog Logging

8.2.3. Logging Counting and Timestamps

8.2.4. Logging to Flash Memory

8.2.5. Configuration Change Notification and Logging

8.2.6. Configuration Archive and Rollback

8.2.7. Logging with Access-Lists

8.3. NTP

8.3.1. NTP

8.3.2. NTP Authentication

8.3.3. NTP Access Control

8.3.4. NTP Version 3 & 4

8.4. EEM

8.4.1. KRON Command Schedule

8.4.2. EEM Scripting: Interface Events

8.4.3. EEM Scripting: Syslog Events

8.4.4. EEM Scripting: CLI Events

8.4.5. EEM Scripting: Periodic Scheduling

8.4.6. EEM Scripting: Advanced Features

8.4.7. EEM Applets

8.5. Miscellaneous System Management

8.5.1. Auto-Install over LAN Interfaces using DHCP

8.5.2. Auto-Install over LAN Interfaces Using RARP

8.5.3. IOS Menus

8.5.4. IOS Banners

8.5.5. Exec Aliases

8.5.6. TCP Keepalives

8.5.7. Generating Exception Core Dumps

8.5.8. Conditional Debugging

8.5.9. Tuning Packet Buffers

8.5.10. CDP

8.5.11. Remote Shell

9. Network Services

9.1. Object Tracking

9.1.1. IP SLA

9.1.2. Enhanced Object Tracking

9.1.3. Tracking Lists

9.2. First Hop Redundancy Protocols

9.2.1. HSRP

9.2.2. VRRP

9.2.3. GLBP

9.2.4. Router Redundancy and Object Tracking

9.2.5. IPv6 RS & RA Redundancy

9.3. DHCP

9.3.1. DHCP Server

9.3.2. DHCP Client

9.3.3. DHCP Relay

9.3.4. DHCP Host Pools

9.3.5. DHCP On-Demand Pool

9.3.6. DHCP Proxy

9.3.7. DHCP Information Option

9.3.8. DHCP Authorized ARP

9.3.9. SLAAC/DHCPv6 interaction

9.3.10. Stateful & Stateless DHCPv6

9.3.11. DHCPv6 prefix delegation

9.4. DNS

9.4.1. IOS Authoritative DNS Server

9.4.2. IOS Caching DNS Server

9.4.3. IOS DNS Spoofing

9.5. NAT

9.5.1. Basic NAT

9.5.2. NAT Overload

9.5.3. NAT with Route Maps

9.5.4. Static NAT

9.5.5. Static PAT

9.5.6. Static NAT and IP Aliasing

9.5.7. Static Policy NAT

9.5.8. NAT with Overlapping Subnets

9.5.9. TCP Load Distribution with NAT

9.5.10. Stateful NAT with HSRP

9.5.11. Stateful NAT with Primary/Backup

9.5.12. NAT Virtual Interface

9.5.13. NAT Default Interface

9.5.14. Reversible NAT

9.5.15. Static Extendable NAT

9.5.16. NAT ALG

9.6. Traffic Accounting

9.6.1. IP Precedence Accounting

9.6.2. IP Output Packet Accounting

9.6.3. IP Access Violation Accounting

9.6.4. MAC Address Accounting

9.7. NetFlow

9.7.1. Netflow v5 & v9

9.7.2. Netflow Ingress and Egress

9.7.3. Netflow Top Talkers

9.7.4. Netflow Aggregation Cache

9.7.5. Netflow Random Sampling

9.7.6. Netflow Input Filters

9.7.7. Netflow Export

9.8. Miscellaneous Network Services

9.8.1. Proxy ARP

9.8.2. IRDP

9.8.3. Router ICMP Settings

9.8.3.1. TCP Optimization

9.8.4. IOS Small Services and Finger

9.8.5. Directed Broadcasts and UDP Forwarding

9.8.6. NBAR Protocol Discovery

9.8.7. IP Event Dampening

9.8.8. Conditional Debugging

9.8.9. Embedded Packet Capture

9.8.10. Interpreting Packet Captures

Last edited by daniel.larsson on Mon Jun 22, 2015 12:48 am; edited 15 times in total

Layer 3 - How to approach the Studyplan, The INE CCIE RSv5 Workbook and their ATC (Advanced Technology Class)


Layer 3 IP Routing


Technology: Protocol Independent IPv4 Routing

Since these are my first study notes on the Layer 3 part, I need to remember how to approach all the topics, because the scope is very big. My rough estimate is that about 85% of the lab is going to be IP-based.

My study technique above was written down to help me learn in a structured way and to make it easy to look back. I quickly understood that the approach I used for Layer 2 does not work for Layer 3, for many reasons.

So what worked best for me was to research in advance which topics I should study before watching the videos, and to look for information that matches the study-plan. It took a lot of trial and error before I figured out a path that was aligned with both the study-plan and the CCIE RSv5 Blueprint topics released by Cisco. I decided a long time ago to go with INE and their way of studying, because I feel that they have developed some very good books and videos. Most importantly, I find their instructors to be very detailed and interesting, and they cover the topics from a CCIE perspective. Of all the vendors I tried, I liked their way best!

There was also the fact that I've met many CCIEs who have used INE as well, and they recommended their way!

Now my problem with working with INE is that:

-They have a Workbook.

-They have an Advanced Technology Class video series.

-They have their topology and their rack rentals.

-And they have their study-plan developed by Brian McGahan.

The problem is, their workbook and their ATC (Advanced Technology Class) videos are not aligned with their study-plan!

So in the beginning I was quite... disturbed by the fact that their study-plan is not aligned with either the workbook or their ATC. It was difficult to put together a good plan that followed theory, instructors and practice labs.

After trying a lot of different methods, I came to the conclusion that this is what I had to do to keep everything aligned for a better learning strategy:

-Step 1. Look at the INE RSv5 Workbook and see which labs come next.

-Step 2. Look at the study plan and try to match the topic to the next labs in the Workbook, for example IP-Routing, Protocol Independent IPv4 Routing.

-Step 3. Don't watch it yet, but look at the PowerPoint slides to learn which topics are covered in the ATC-video. Write these down!

-Step 4. Research where you can study the different topics covered in the ATC-video, and write the sources down. Be sure to cover them all!

-Step 5. Study the theory about these topics.

-Step 6. Watch the same ATC-video completely.

-Step 7. Do the INE RSv5 Workbook labs that cover all the topics discussed in the ATC-video.

To show why I think this is needed, here is an example:

1. I looked at the INE RSv5 Workbook and came to the conclusion that after the Layer 2 labs, the next labs were these:

- Routing to Multipoint Broadcast Interfaces

- Routing to NBMA Interfaces

- Longest Match Routing

- Floating Static Routes

...and so on

(I usually check a few labs ahead to try to group similar topics in a single study-session)

2. I then looked at the study plan and saw that these labs cover at least these topics:

..3.1. Protocol Independent IPv4 Routing

....3.1.1. IPv4 Addressing

....3.1.2. IPv4 ARP

Obviously we need to understand IPv4 addresses and how to configure them to set up any kind of routing in the first place!

Also, since routing requires some sort of address resolution to work, we need to completely understand ARP to even send a packet out an interface!

(ARP is arguably a Layer 2 topic. It's definitely a Layer 2 protocol, but it makes the most sense to learn ARP while studying IP!)

....3.1.3. Longest Match Routing

....3.1.5. Static Routing

We need to understand how the router matches a packet against the routing table before forwarding it.

Also, the most basic form of routing is a configured static route. Since the lab titles say "Routing to", it's logical to assume that knowledge of static routing is needed.
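To make longest match routing concrete, here is a minimal Python sketch of the lookup. It only illustrates the rule, it is not how IOS or CEF implements it. The prefixes, next hops and the function name longest_match are made up for illustration; only the standard-library ipaddress module is used.

```python
import ipaddress

# Hypothetical routing table: (prefix, next hop) pairs chosen only for illustration.
ROUTES = [
    ("0.0.0.0/0", "192.0.2.1"),
    ("10.0.0.0/8", "192.0.2.2"),
    ("10.1.0.0/16", "192.0.2.3"),
    ("10.1.1.0/24", "192.0.2.4"),
]


def longest_match(destination, routes):
    """Return (network, next_hop) for the most specific prefix containing destination, or None."""
    dest = ipaddress.ip_address(destination)
    best = None
    for prefix, next_hop in routes:
        network = ipaddress.ip_network(prefix)
        # A route is a candidate only if the destination falls inside its prefix.
        if dest in network:
            # Among the candidates, the longest prefix length wins.
            if best is None or network.prefixlen > best[0].prefixlen:
                best = (network, next_hop)
    return best


for dst in ("10.1.1.7", "10.1.2.7", "10.9.9.9", "8.8.8.8"):
    network, next_hop = longest_match(dst, ROUTES)
    print(dst, "->", network, "via", next_hop)
```

A packet to 10.1.1.7 matches all four entries, but the /24 wins because it is the most specific. A packet to 8.8.8.8 only matches the default route.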

....3.1.6. Route Recursion

....3.1.8. Default Routing

....3.1.10. Floating Static Routes

....3.1.4. Administrative Distance

....3.1.7. Egress Interface vs. Next Hop Static Routing

....3.1.9. CEF

Here's where it gets a little bit trickier. Since these are CCIE study notes, I will assume that at least CCNP-level knowledge is in place as a foundation before starting.

Route recursion is needed to understand how a route is ultimately resolved to an exit interface.

Default routing speaks for itself: if there is no more specific longest-match route in the table, we need to know how to tell the router what to do with packets that don't match any route.

Floating Static Route - simple enough since there was a lab that said so!

Administrative Distance - because it has to do with how the router chooses which route to install in the routing-table, and the labs were about routing.

Egress Interface vs. Next-Hop Static Routing - More difficult to place here, but since the labs said "Multipoint Broadcast Interfaces" and "Routing to NBMA Interfaces", I know this fits here because of how ARP works.
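As a rough sketch of how Administrative Distance and Floating Static Routes fit together, the Python below picks between competing routes to the same prefix. The AD numbers are the usual Cisco defaults (connected 0, static 1, internal EIGRP 90, OSPF 110, RIP 120). The dictionary layout, the addresses and the function name install_best are my own assumptions for illustration, not anything taken from IOS.

```python
# Usual Cisco default administrative distances for a few route sources.
DEFAULT_AD = {"connected": 0, "static": 1, "eigrp": 90, "ospf": 110, "rip": 120}


def install_best(candidates):
    """Return the candidate with the lowest administrative distance, or None.

    All candidates are assumed to describe the SAME prefix. Each one is a dict
    with 'source', 'next_hop' and an optional 'ad' that overrides the default.
    """
    if not candidates:
        return None
    return min(candidates, key=lambda c: c.get("ad", DEFAULT_AD[c["source"]]))


# Hypothetical candidates for one prefix: an OSPF route and a floating static.
candidates = [
    {"source": "ospf", "next_hop": "10.0.12.2"},
    {"source": "static", "next_hop": "10.0.13.3", "ad": 200},  # floating static
]

print(install_best(candidates)["source"])  # the OSPF route is installed

# Simulate the OSPF route being withdrawn: the floating static takes over.
candidates = [c for c in candidates if c["source"] != "ospf"]
print(install_best(candidates)["source"])
```

The static route only "floats" because its AD was raised above the OSPF default of 110. With the default static AD of 1 it would always win.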

3. I then skim the ATC-video slides to see which topics are covered and break out the details. In this case:

IP Routing Process Overview

..Routing

..Switching

..Encapsulation

Routing to a Next-Hop

..Recurse to the interface

..If multipoint, resolve next-hop

Routing to a Multipoint Interface

..Recursion not required

..Resolve address for final destination

Routing to a Point-to-Point interface

Default Routing

..To a next-hop

..To a Multipoint interface

..To a point-to-point interface
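The outline above boils down to one question: which address does the router need a Layer 2 mapping for? Here is a small Python sketch of that decision. The function name address_to_resolve and the interface type strings are hypothetical, and the sketch assumes the route has already been recursed to an outgoing interface.

```python
def address_to_resolve(destination, next_hop=None, interface_type="multipoint"):
    """Return the IP address that needs a Layer 2 mapping (for example via ARP),
    or None when no resolution is needed.

    destination    -- final destination IP address of the packet
    next_hop       -- next-hop IP from the route, or None if the route only
                      names an egress interface
    interface_type -- "multipoint" (Ethernet, NBMA) or "point-to-point"
    """
    if interface_type == "point-to-point":
        # Only one possible receiver on the link, so nothing to resolve.
        return None
    if next_hop is not None:
        # Route to a next hop on a multipoint interface: resolve the next hop.
        return next_hop
    # Route straight to a multipoint interface: resolve the final destination.
    return destination


print(address_to_resolve("10.0.0.5", next_hop="192.0.2.1"))
print(address_to_resolve("10.0.0.5"))
print(address_to_resolve("10.0.0.5", interface_type="point-to-point"))
```

The middle case is the one to watch: a static route that only names a multipoint interface forces the router to resolve every single destination separately, which is why the egress interface vs. next-hop choice matters.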

Note: At this stage it's possible that you may have to add topics to study for step 2.

4. I try to find the best places available to study all of these topics before I'm ready to watch the video.

Note: In this particular case the topics are quite "basic". Too basic to be well documented on Cisco's webpage.

5. I then study those topics and write down what I learn and how much time it took me.

6. I then watch the ATC-video to get in-depth knowledge from an instructor and hopefully learn something that I missed along the way!

7. Finally I do the labs, with a good, strong foundation of knowledge about the topics.

Note: Some of the labs can be very basic, some of them extremely advanced. For the advanced ones you will have to break down the topic, create your own labs and mess with them. Break it, solve it, learn it. For the most part INE does a very good job of slowly increasing the difficulty, but some topics have very few labs. So be honest with yourself when doing the labs!

This may seem very time consuming at first, but here's why I recommend doing it this way:

1. For starters, you get a study-plan and a topic-by-topic study that is 100% aligned with the INE Workbook and their ATC-videos.

(I don't know about you, but I think it's much easier to prepare this way instead of jumping around topics)

2. You will also know exactly which topics you have studied for your study-plan, whether you are using your own or the Cisco RSv5 blueprint topics.

(Among all the things I've done during my studies so far, this is probably the most important one, because I know what I have left!)

3. You will learn how to navigate the Cisco webpage. I can't stress enough how important that is, since it will be available during the lab! You will be frustrated at first, but you will know where to find the topics IF you need them during the lab!

Layer 3 - Protocol Independent IPv4 Routing PART 1


Layer 3

Technology:

Note before reading:

These study notes for Layer 3 technologies are rather long, but that's mainly because there is a lot to read before doing labs when you reach Layer 3. Even the very basic parts require you to read through the topics thoroughly. This specific part of the CCIE RSv5 blueprint covers all the basics, like really basic stuff, so the book required here is at about CCNA level. I split Protocol Independent Routing into two parts: in Part 1 I will cover the very basics of routing, and in Part 2 I will step it up a bit and go for the new/unknown topics.

Protocol Independent IPv4 Routing - PART 1

(Basic Routing Process Overview, IPv4 Addressing, ARP, Longest Match Routing, Administrative Distance, Static Routing, Route Recursion)

Note: A very "broad" topic that covers the very basics of routing from scratch. Multiple sources are required to cover these topics, some of them from Cisco and some of them from books. There's no common thread to follow in this part, it's extremely messy!

I will start with IP addresses and ARP, then move on to how the routing process works, and end this part with how the router decides what to do with a packet.

The main book I found most useful here is the old CCNA Exploration - Network Fundamentals and Routing Protocols and Concepts. Yes, these are still very basic topics, and no other book I've read explains them better!

Looking through the topics for this part, it's clear to me that they are foundation topics with the main goal of re-learning some of the basics and getting familiar with basic routing before moving on. That's why I'll keep the sources at CCNA/CCNP level and combine them with the IOS configuration guide.

IPv4 Addressing

Technology:

- IPv4 Addressing

- ARP

- Basic Routing Process Overview

- Longest Match Routing

- Administrative Distance

- Static Routing & Egress Interface vs Next Hop Static Routing

- Route Recursion

- Default Routing

- Floating Static Routes

- CEF

IPv4 Addressing

- Read through a chapter of the "CCDA Official Certification Guide" that covers IPv4 in depth. Of all the sources I looked through, I found this to be a good match for covering the basics.

Book: CCDA Official Certification Guide, Chapter 8.

Chapter 8 is named: Internet Protocol Version 4.

- Read through Configuration and technology explanations of IPv4 Addressing for the 15.0 IOS release.

http://www.cisco.com/c/en/us/td/docs/ios-xml/ios/ipaddr_ipv4/configuration/15-mt/ipv4-15-mt-book/config-ipv4-addr.html

Learned:

-The Total Length field in the IPv4 header is compared against the outgoing interface MTU value to determine whether the packet needs to be fragmented. In other words, if the Total Length value is greater than the outgoing interface MTU value, the packet will be fragmented.

-Fragmentation is permanent all the way to the destination IP host, which is the one that reassembles the fragments. The only check made along the way is whether the Total Length field is greater than the outgoing interface MTU value. This means that a packet can be fragmented multiple times along the path to the final IP host.
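
To make the Total Length vs MTU check concrete, here is a minimal Python sketch of how one packet would be split. It is only an illustration, not how IOS implements it: the function name fragment is hypothetical, it assumes a 20-byte header without options, and it ignores the DF bit. The one rule it does model is that every fragment except the last carries a payload that is a multiple of 8 bytes, because the Fragment Offset field counts in 8-byte units.

```python
def fragment(total_length, mtu, header_len=20):
    """Split one IPv4 packet into fragments that fit the outgoing MTU.

    Returns a list of (fragment_offset, total_length, more_fragments)
    tuples, where fragment_offset is in 8-byte units as in the header.
    Assumption: fixed header length, DF bit not considered.
    """
    # The only check: does the packet fit the outgoing interface MTU?
    if total_length <= mtu:
        return [(0, total_length, False)]

    payload = total_length - header_len
    # Largest payload per fragment, rounded down to a multiple of 8 bytes
    max_payload = (mtu - header_len) // 8 * 8

    fragments = []
    offset = 0
    while offset < payload:
        size = min(max_payload, payload - offset)
        more_fragments = offset + size < payload
        fragments.append((offset // 8, header_len + size, more_fragments))
        offset += size
    return fragments


if __name__ == "__main__":
    # A 4000-byte packet leaving an interface with MTU 1500
    for frag in fragment(4000, 1500):
        print(frag)
```

Each fragment gets its own header, so the Total Length of every fragment is its payload plus 20 bytes, and only the last fragment has the More Fragments flag cleared.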

-The ToS field in the IPv4 header has gone through multiple changes over the years:

1981 (RFC 791) - A.k.a the original IP Precedence bits

1992 (RFC 1349) - A.k.a the redefined Type of Service bits (used alongside the IP Precedence bits)

1998 (RFC 2474) - A.k.a the Differentiated Services / Differentiated Services Codepoint bits

2001 (RFC 3168) - A.k.a the DS / DSCP bits with an added Explicit Congestion Notification field

This is what we would call QoS, or Quality of Service. It's outside the scope of this topic, but I'll get back to it when I'm studying the QoS parts. The reason I wrote this down is that it's good to learn and remember that these are all different names for the same thing: classifying traffic so you can prioritize traffic classes independently. It started with just 3 IP Precedence bits (8 traffic classes) in 1981 and went up to 6 bits (64 different traffic types) in the later RFCs.
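
To see that these really are different readings of the same byte, here is a small Python sketch that decodes one ToS byte in three ways. The function name decode_tos and the dictionary keys are my own choices for illustration; the bit positions follow the RFCs listed above (3 high-order bits for IP Precedence, 6 high-order bits for DSCP, 2 low-order bits for ECN).

```python
def decode_tos(tos):
    """Decode the 8-bit ToS / DS byte of an IPv4 header.

    Hypothetical helper for illustration only.
    """
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS is a single byte (0-255)")
    return {
        "precedence": tos >> 5,  # 3 high-order bits (RFC 791)
        "dscp": tos >> 2,        # 6 high-order bits (RFC 2474)
        "ecn": tos & 0b11,       # 2 low-order bits (RFC 3168)
    }


if __name__ == "__main__":
    # 0xB8 = 1011 1000: DSCP 46 (EF), which overlaps IP Precedence 5
    print(decode_tos(0xB8))
```

Because the DSCP bits start at the same position as the old precedence bits, a device that only understands IP Precedence still reads a sensible class from a DSCP-marked packet.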

-As could be expected, a router requires each individual interface to be configured in a different subnet. However, with ip routing disabled the IOS still accepts IP addresses on the interfaces, which makes it possible to give a router multiple interfaces in, say, the 192.168.0.0/24 network.

What happens then is that packets become un-routable unless both interfaces are connected to the same network segment, because the router would not know which interface to send a packet out of when the hosts are in different segments but in the same network.

That is why the IOS on a Cisco router prevents such configuration mistakes from happening. But as mentioned, with ip routing disabled it's still possible to configure IP addresses, and in that case the IOS does not perform this check.
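
The overlap check itself is easy to reproduce offline. Below is a minimal Python sketch using the standard ipaddress module; it is not what the IOS does internally, and the function name overlapping_interfaces and the interface names are made up for the example.

```python
import ipaddress


def overlapping_interfaces(interfaces):
    """Return pairs of interface names whose subnets overlap.

    interfaces maps an interface name to "address/prefix".
    Hypothetical helper for illustration only.
    """
    # Derive the connected network from each interface address
    networks = {
        name: ipaddress.ip_interface(cidr).network
        for name, cidr in interfaces.items()
    }
    names = sorted(networks)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if networks[a].overlaps(networks[b])
    ]


if __name__ == "__main__":
    config = {
        "Fa0/0": "192.168.0.1/24",
        "Fa0/1": "192.168.0.2/24",  # same subnet as Fa0/0
        "Fa1/0": "10.0.0.1/8",
    }
    print(overlapping_interfaces(config))
```

Running a planned addressing scheme through a check like this before typing it into the routers catches the same mistake the IOS would reject.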

-It's possible to configure multiple ip-addresses on the same interface for migration purposes. Doing this will make that interface belong to multiple networks and as such it will receive unicast, broadcast and multicast packets destined for both networks.

-When subnetting, subnet 0 is reserved in some IOS releases. If you need to use the "subnet 0" subnet, the command "ip subnet-zero" is required.

-One very useful command that I was unaware of: it's possible to configure the format in which the IOS presents subnet masks. The default mode is the "dotted decimal" form, for example 255.255.255.0.

The command: term ip netmask-format bitcount ... would present it in CIDR notation, such as /24, instead!

Note: This command can also be set individually for each line (for example line con 0, line vty 4 15 etc)
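
For practicing the conversion between the mask formats by hand, here is a small Python sketch that prints one mask in the decimal, bitcount and hexadecimal forms. The function name mask_formats is hypothetical and the dictionary keys simply reuse the keywords of the IOS command; the hexadecimal layout shown is my assumption of a reasonable notation, so compare it with what your own router prints.

```python
import ipaddress


def mask_formats(mask):
    """Show one subnet mask in decimal, bitcount and hexadecimal form.

    mask can be dotted decimal ("255.255.255.0") or a prefix length ("24").
    Hypothetical helper for illustration only.
    """
    # Attach the mask to 0.0.0.0 so the module parses it for us
    net = ipaddress.ip_network("0.0.0.0/" + mask)
    return {
        "decimal": str(net.netmask),
        "bitcount": "/" + str(net.prefixlen),
        "hexadecimal": "0x{:08X}".format(int(net.netmask)),
    }


if __name__ == "__main__":
    print(mask_formats("255.255.255.0"))
    print(mask_formats("30"))
```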

-It's possible to configure an interface to use the IP address of another interface. This is called the "ip unnumbered" feature and is used to conserve IP addresses.

This command is only usable on Point-to-Point WAN links (non-multiaccess interfaces):

configure terminal

int loopback 0

ip address 192.168.0.1 255.255.255.0

int fa0/1

ip unnumbered loopback 0

end

(Actually, in a real-world scenario it's more common to use the ip unnumbered command on the serial interfaces, or whichever interfaces your point-to-point links use)

This will make Fa0/1 use the IP address configured on the loopback 0 interface.

-With newer IOS releases it's possible to use the network address and the broadcast address as host addresses on non-multiaccess Point-to-Point links. In other words, it's now possible to use a /31 mask for Point-to-Point links instead of the previously most common /30 mask.

Time required: 1½ hours.
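
The difference between /30 and /31 is easy to see by listing the addresses. Here is a minimal Python sketch; the function name usable_addresses is hypothetical, the 10.0.0.0 prefixes are just example values, and the helper is only meant for small prefixes like the ones used on point-to-point links since it walks every address.

```python
import ipaddress


def usable_addresses(cidr):
    """List the addresses that can be assigned to interfaces in a subnet.

    Hypothetical helper for illustration only.
    """
    net = ipaddress.ip_network(cidr)
    if net.prefixlen == 31:
        # /31 on a point-to-point link: both addresses are usable
        return [str(net.network_address), str(net.broadcast_address)]
    # Otherwise drop the network address and the broadcast address
    return [str(ip) for ip in net][1:-1]


if __name__ == "__main__":
    print(usable_addresses("10.0.0.0/30"))  # 2 usable out of 4 addresses
    print(usable_addresses("10.0.0.0/31"))  # 2 usable out of 2 addresses
```

A /30 spends four addresses to number two interfaces, while a /31 spends only two, which halves the address space needed for point-to-point links.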

ARP

- Read through a chapter of the "CCNA Exploration - Network Fundamentals" book that covers the basics of ARP. There are many sources for in-depth explanations of ARP, but I believe that a good, solid foundational understanding of ARP will be enough for now. I will go more in depth when I come to the Security topics.

Book: CCNA Exploration - Network Fundamentals, Chapter 9.

Chapter 9 is named: Ethernet.

More specifically, part 9.7 is called "Address Resolution Protocol" and that was exactly what I was looking for!

- Read through Configuration and technology explanations of ARP for the 15.0 IOS release.